Update 24 June 2016

For future predictions I plan on adding a confidence level, so when you have such a small margin in a prediction result on a binary event it enables a more quantitative analysis.

Update 15 June 2016

Change in prediction. After reassessing the data now the final campaigns have pushed through, the vote is showing as a slight leave. During this campaign the data has been very turbulent and did show the gap narrow but still with a remain vote. It’s been interesting that a large percentage of a population can be undecided and swayed by the media.

Overview

I’ll detail at a macro level how my platform can automatically predict in real-time the outcome of the upcoming British EU exit vote (BREXIT). It has been previously used in a similar manner to predict US and British election results within less than 1% error rate. The components, advanced systems, and algorithms used to fully achieve this will be detailed in this paper at the business usage level to allow the reader to understand other areas of use for the platform.

How it’s done (in brief)

The main processes involved in predicating future outcomes are

• Efficiently collect documents

• Extract and normalize the data with the documents

• Analyze and machine understand the normalized data

• Cross-correlate the data and run advanced algorithms

• Produce the present the prediction

This is all presented instantly and achieved with low latency. Each section listed has unique challenges and these will be outlined in the next section.

How it’s done (the science)

Efficiently collect document

Collecting documents efficiently provides many challenges. For the case of the BREXIT the documents are in the form of news articles collected from the internet. Internet web scrapers search the Internet and social media for news articles, and index these articles much in the same way google indexes the web. The scrapers run in parallel on multiple different cloud computing providers using low cost micro instances. These micro instances normalize and compress the data before sending to a central transactional server farm. This scheme provides global low cost redundancy whilst allowing for a linear cost over latency, i.e. the more micro instances, the more articles are indexed per second, but for more cost. Currently the scrapers running on 8 instances find and index 10 unique articles per second.

It’s currently indexing over 560,000 global news sources. For comparison Googles indexes ~25,000 for it’s news service. This is all in real-time with low latency, as having the latest breaking news is always critical.

Extract and normalize the data with the documents

First the meaningfully text from the web page is extracted and then run through Natural Language Processing (NLP) algorithms. This process is where the machine understands as close as possible to a human understanding of the entity types and context they relate to. The system auto discovers new entities along with their aliases, an example for this case would be “Brexit”, “British EU exit”, “GB EU exit” etc. These terms all mean the same thing and so the system should process them as a single entity. These entities and relationships are then placed into a custom built distributed graph database. The graph sits on top of Cassandra and also provides a linearly scalable data size and concurrent read/write access speed.

Analyze and machine understand the normalized data

Machine Learning (ML) and other custom algorithms are then employed to perform geospatial reckoning, sentiment analysis on five dimensions, and deep inter-entity relational links.

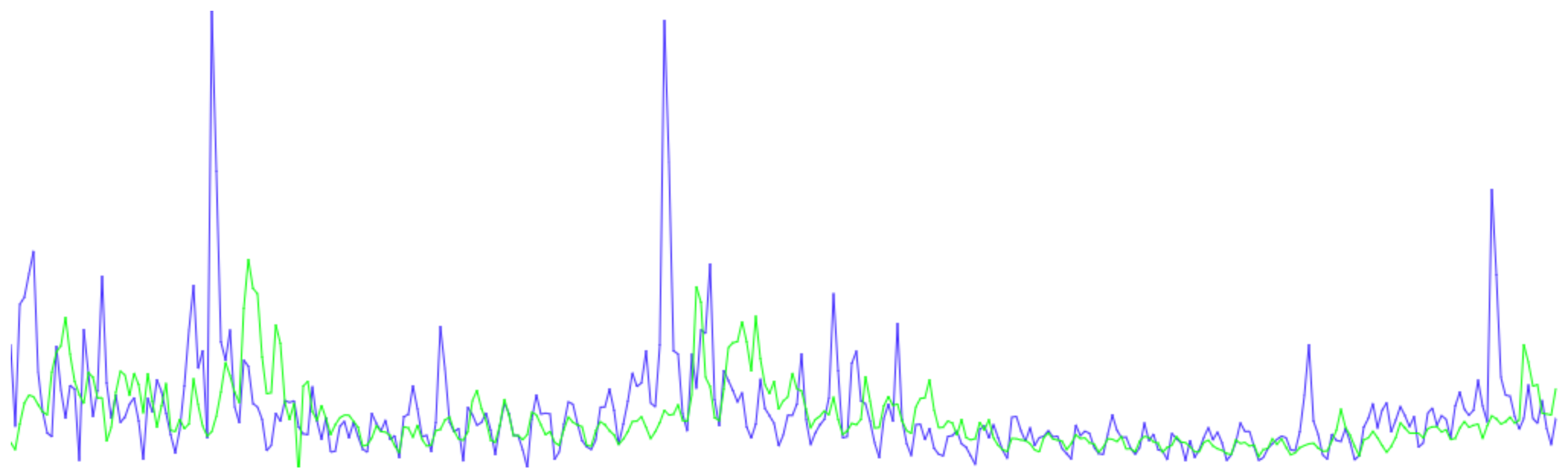

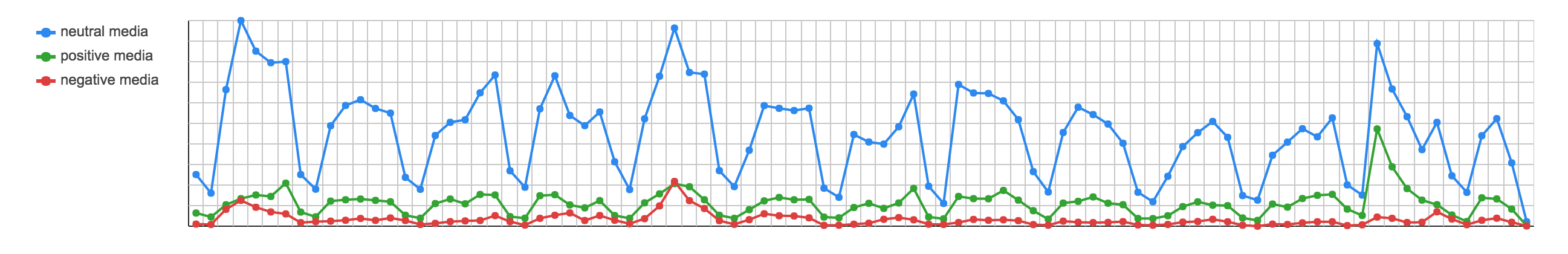

From this the sentiment for BREXIT can be quickly retrieved. It should be noted that for BREXIT a negative or positive sentiment is not an indicator of the overall medias intention of wanting an exit. An article could positively agree for either leaving or staying. This is where the graph DB is used to retrieve the complex entities relationship cluster link, along with time and sentiment.

For example, a typical cluster for a pro BREXIT could include:

BREXIT -> “immigration” positive

And for an anti BREXIT:

BREXIT -> “weak pound” negative

As can been seen from this, sentiment alone isn’t an indicator of a desire for an EU exit, the relationships are also needed to determine desire.

Cross-correlate the data and run advanced algorithms

This is the area where big data comes into play. Using statistical analysis from large data sets, biases and outlier data can be removed, thus keeping the ending results clean. Entity related trends are determined along with sentiment mood.

Further Spectral Regression is applied to predict the next 7 days based on historical patterns within the scope of the entity and its related cluster terms.

Produce and present the prediction

The results from the sentiment analysis of the clusters is then simply presented to the user as a percentage chance of binary event of Britain exiting or staying in the EU.

From correlating social media to news media, it was discovered that the news media sets the trends and opinion choices for the masses. The masses turn to social media to further express an opinion that they derived from the news media. Tracking unbiasedly the news media allows you to track the masses because of the scale of numbers. This is way the system works with such precise results, although simple in principle, it has been shown in the paper it’s complex in logistics.

Results

The data as of April 10 2016 shows a final result of 40% for Britain leaving the EU. Historically the data shows as always being a close vote, but in the run up to the final days the gap is lessening. From this consistent diminishing historical gap, the likelihood for last minute swings has a low probability, but still shows as potentially possible if a dramatic media campaign has still to occur.

In closing

The system discovers ~80,000 new entities per day. This number varies depending on new news topics. This is key to keeping the results relevant, tomorrows news won’t be the same as todays and over years’ large topic drifts would occur.

A good example is Apple computers. 20 years ago nobody would future associate them with phones and music and because the system can make these associations it can better sentiment analyze which in tern correlates to stock price because they are a very consumer driven company.

Another example is Donald Trump; in early 2015 someone asked if the system had a bug because it was showing Donald Trump in the top 8 for winning the US presidency. This was before he announced that he was running, so it looked ridiculous to see his name amongst the other candidates. I simply told the person who reported the bug that the system determined, from the data relationships that he may plan to run and if so has a statistical chance of winning. Further shock happened when the system increased his chances even through he was garnering so much negative press.

Steven