I recently learned how baby chicks follow their parent around, observing and copying. They do this to learn the basic patterns they need to adapt and survive. I wondered whether the same idea could be applied in a game: the AI would consist of a neural net, and AI bots would follow a human player around, learning from the player's behaviour.

As a foundation I chose WebGL and the graphics from The Legend of Zelda: A Link to the Past. Besides being a great game, it also provides a rich environment.

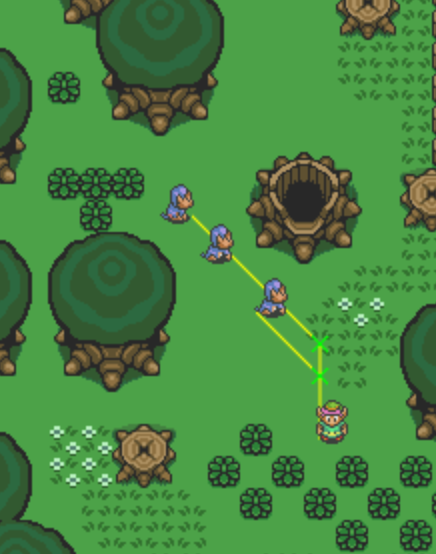

I created a player character (Link) and a set of Kiki the Monkey bots. The bots "watch" the player and try to follow him around, learning as they go by reacting to negative events. Each bot has feelers: projected lines that look for contact with objects and walls. A contact feeds a negative input to the neural net, and the harder the contact, the more negative the value. This naturally trains the net to avoid objects. Why code object avoidance when you can train it?
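To make the feeler idea concrete, here is a minimal sketch of how the negative inputs could be computed. It is not the project's actual code: the `Vec2` and `Rect` types, the function names, the use of axis-aligned rectangles for obstacles, and the reading of a "harder" contact as "an obstacle closer to the bot along the feeler" are all assumptions made for illustration.

```typescript
// Hypothetical types; none of these names come from the original project.
interface Vec2 { x: number; y: number; }
interface Rect { x: number; y: number; w: number; h: number; } // axis-aligned obstacle

// Returns the fraction t in [0, 1] along the segment origin -> origin + dir
// where it first enters the rectangle, or null if it misses (slab method).
function hitFraction(origin: Vec2, dir: Vec2, r: Rect): number | null {
  let tMin = 0;
  let tMax = 1;
  const axes: Array<[number, number, number, number]> = [
    [origin.x, dir.x, r.x, r.x + r.w],
    [origin.y, dir.y, r.y, r.y + r.h],
  ];
  for (const [o, d, lo, hi] of axes) {
    if (d === 0) {
      if (o < lo || o > hi) return null; // parallel to this slab and outside it
      continue;
    }
    const t1 = (lo - o) / d;
    const t2 = (hi - o) / d;
    tMin = Math.max(tMin, Math.min(t1, t2));
    tMax = Math.min(tMax, Math.max(t1, t2));
    if (tMin > tMax) return null;
  }
  return tMin;
}

// One input per feeler: 0 when nothing is touched, approaching -1 as the
// obstacle gets closer to the bot (a "harder" contact, by assumption).
function feelerInputs(
  pos: Vec2,
  heading: number,   // bot facing, in radians
  angles: number[],  // feeler angles relative to the heading
  length: number,    // feeler length in world units
  obstacles: Rect[],
): number[] {
  return angles.map((a) => {
    const dir = {
      x: Math.cos(heading + a) * length,
      y: Math.sin(heading + a) * length,
    };
    let nearest = 1; // 1 means no contact within the feeler length
    for (const r of obstacles) {
      const t = hitFraction(pos, dir, r);
      if (t !== null && t < nearest) nearest = t;
    }
    return nearest - 1;
  });
}
```

With a bot at the origin facing along the x axis, one feeler straight ahead and one at 90 degrees, and a wall starting halfway along the forward feeler, the forward feeler yields a negative value while the side feeler stays at zero. Those values are what would be fed to the net as sensory inputs.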

When propagated, the net returns the best direction of travel based on the sensory inputs. A nice side effect is that if the player or another object gets too close to a bot, the bot moves away. As a group, the bots look as if a central controller were routing them all in a complex manner.
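The propagation step can be sketched as a plain feed-forward pass followed by picking the strongest output. This is only an illustration under assumptions: the `Layer` shape, the `tanh` activation, and the idea of one output per candidate direction are mine, and the real net may be structured differently.

```typescript
// Hypothetical layer shape: weights[j][i] connects input i to output j.
interface Layer { weights: number[][]; biases: number[]; }

// Compute one layer's outputs from its inputs.
function forward(layer: Layer, inputs: number[]): number[] {
  return layer.weights.map((row, j) => {
    const sum = row.reduce((acc, w, i) => acc + w * inputs[i], layer.biases[j]);
    return Math.tanh(sum); // squash each output into (-1, 1)
  });
}

// Propagate the sensory inputs through every layer, then return the index of
// the highest-scoring output, treated here as the chosen direction.
function bestDirection(layers: Layer[], inputs: number[]): number {
  const scores = layers.reduce((acts, layer) => forward(layer, acts), inputs);
  let best = 0;
  for (let j = 1; j < scores.length; j++) {
    if (scores[j] > scores[best]) best = j;
  }
  return best;
}
```

Because the inputs are negative whenever something is close, outputs whose weights oppose those inputs end up with the highest score, which is one way the "move away" behaviour could fall out of the same forward pass rather than needing separate avoidance code.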

Something I would still like to add is a reward system, e.g. health pickups. This should add another layer of complexity to the perceived intelligence.